Hi,

I used a lookup table in my training data as follows: "lookup_tables": [ { "name": "road", "elements": "data/cuisine_list.json" } ]

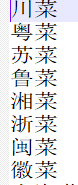

The file cuisine_list.json contains Chinese characters and is saved as UTF-8.

When I run NLU training, I always get this error at intent_entity_featurizer_regex: UnicodeDecodeError: 'gbk' codec can't decode byte 0x9c in position 72: illegal multibyte sequence
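My guess is that the lookup file is being opened with the platform default encoding (gbk on my Windows machine) instead of UTF-8. I have not confirmed this in the Rasa source, so the sketch below only shows the general Python behaviour that I assume is the cause. The names `read_terms` and `sample_list.txt` are hypothetical and are not taken from Rasa.

```python
import os
import tempfile


def read_terms(path, encoding):
    """Read one term per line from a text file, using an explicit encoding."""
    with open(path, encoding=encoding) as f:
        return [line.strip() for line in f if line.strip()]


# Write a small UTF-8 file containing a Chinese character (made-up sample data).
tmp_dir = tempfile.mkdtemp()
sample_path = os.path.join(tmp_dir, "sample_list.txt")
with open(sample_path, "w", encoding="utf-8") as f:
    f.write("中\n")

# Reading the UTF-8 bytes as GBK raises UnicodeDecodeError, similar to my traceback.
try:
    read_terms(sample_path, "gbk")
    gbk_failed = False
except UnicodeDecodeError as err:
    gbk_failed = True
    print("gbk:", err)

# Reading with the encoding the file was actually saved in works.
utf8_terms = read_terms(sample_path, "utf-8")
print("utf-8:", utf8_terms)
```

If this is what is happening, the file itself is fine and the problem is only which encoding is used when it is opened.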

Could you help me solve this problem? Thanks.

Juven