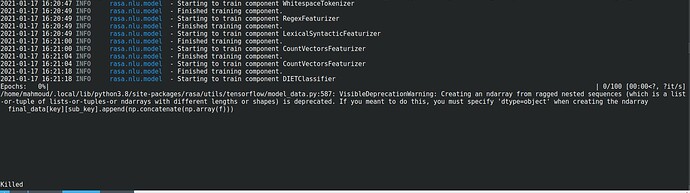

Hello, I ran into a problem when training DIET (see the screenshot above).

Training set size: 7 intents, 15k examples

Config:

```yaml
language: en

pipeline:
  - name: WhitespaceTokenizer
  - name: RegexFeaturizer
  - name: LexicalSyntacticFeaturizer
  - name: CountVectorsFeaturizer
    analyzer: char_wb
    min_ngram: 1
    max_ngram: 4
  - name: DIETClassifier
    epochs: 100
  - name: EntitySynonymMapper
  - name: FallbackClassifier
    threshold: 0.9
    ambiguity_threshold: 0.1
```